Performance matters whether you tune hardware, run machine learning experiments, or track market reach. Choosing the right approach helps you focus on the metrics that drive decisions, not just a single FPS figure or score.

Different suites measure different things: power draw, thermals, clock speeds, GPU and CPU utilization, and compute results. Some suites cover both mobile and desktop, while others target CPUs, GPUs, or full systems.

We’ll match the right tool to the right job — from GPUScore, 3DMark, Unigine, Geekbench, and UserBenchmark to DagsHub, MLflow, Weights & Biases, and Hugging Face. Marketing and SEO options like Brandwatch, Rival IQ, Ahrefs, and Similarweb round out competitive analysis.

Context and reproducibility matter most: versioning, environment parity, and repeatable runs make results actionable. Expect practical advice, real-brand examples, pricing notes, and pitfalls to avoid so your measurements lead to decisions.

Key Takeaways

- Match the right benchmarking solution to the component or use case.

- Look beyond FPS: thermals, power, and compute tell the full story.

- Many platforms offer free tiers; premium features add automation and insights.

- Reproducibility and versioning are essential for trustworthy results.

- Competitive analysis spans social, search, and traffic metrics for market context.

What “performance” really means today across hardware, ML, and marketing

What counts as performance shifts dramatically between hardware labs, ML pipelines, and social dashboards. Each domain uses different metrics to reflect real user impact, so picking the right ones matters more than chasing a single number.

From FPS and thermals to F1 score and share of voice

For hardware, performance covers FPS consistency, power draw, clock speeds, and temperature headroom. These signals show whether a GPU or rig stays smooth under load.

In machine learning, teams track accuracy, precision/recall, and F1 alongside latency and resource use. Those metrics reveal model quality and operational cost.

Marketing performance focuses on engagement rate, reach, impressions, share of voice, and sentiment. These reflect visibility and audience response.

Matching metrics to business goals and user needs

Map 3–5 KPIs per use case—e.g., FPS percentiles, F1 and latency, or engagement rate and SOV. Record environment variables like driver and framework versions so results stay meaningful.

- Stability: users value predictability over peak spikes.

- Context: datasets, devices, and platform changes shift results.

- Continuity: update benchmarks and dashboards as software and market conditions evolve.

How to choose the right benchmarking tool for your system, models, or market

Start by naming the exact outcome you want to measure—frame success in concrete, testable terms. Decide whether you need to validate latency, stability, accuracy, or market reach. That decision narrows your options fast.

Key parameters: device focus, framework compatibility, scalability, and cost

Clarify device scope: CPU, GPU, battery, or full systems, and whether mobile or desktop is the target. For ML, confirm support for TensorFlow, PyTorch, or Scikit-learn.

Weigh scalability and pricing tiers. Free editions can cover basic tests; paid plans add automation, registries, and advanced features.

Cross-platform needs, integrations, and reporting depth

Pick a platform that plugs into your pipelines and dashboards. Look for integrations with Git/DVC, MLflow, or social APIs and exportable, stakeholder-ready visualizations.

When to prioritize reproducibility, automation, or real-time monitoring

Choose reproducibility when experiments must be audited. Choose automation for repeat runs and scheduled reports. Choose real-time monitoring when training or market events need instant alerts.

- Define scope first: what, where, and why.

- Match compatibility to frameworks and device types.

- Run pilots to validate fit before scaling.

Best hardware and system benchmarking tools for GPUs, CPUs, and full rigs

Measure with intent: pick a mix of suites that reveal stability, power, and real-world throughput. No single test tells the whole story, so blend short snapshots with stress runs.

GPUScore by Basemark

GPUScore is ideal when you want cross-platform gaming tests from mobile to high-end desktops. It offers free noncommercial runs and includes ray tracing, making it useful for emerging graphics features.

3DMark and PCMark

These are mature suites many reviewers use. They provide feature tests and stress sequences that help form vendor-agnostic baselines for system and GPU performance.

Unigine

Unigine has free basic editions and a premium option. It’s easy to run, but the last major release predates newer GPU tech, so its relevance is limited for the latest cards.

Geekbench 6 and UserBenchmark

Geekbench 6 gives quick CPU and GPU snapshots across macOS, Windows, Linux, iOS, and Android with a free tier. UserBenchmark is PC-only, runs a single multi-component test, and supplies broad comparison charts for desktop users.

- Mix tests: use 3DMark feature runs with GPUScore ray tracing to triangulate results.

- Track metrics: capture average and percentile FPS, power draw, thermals, and clock behavior.

- Record versions: log OS, driver, and software versions and retest after updates.
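One way to make the "record versions" habit concrete is to save a small environment snapshot next to every result. The sketch below uses only Python's standard library. The `gpu_driver` and `benchmark_version` keys are hypothetical: driver and suite versions cannot be discovered portably, so the caller supplies them by hand.

```python
import json
import platform
import sys
from datetime import datetime, timezone


def environment_snapshot(extra=None):
    """Collect basic environment metadata to store next to benchmark results."""
    snapshot = {
        "captured_at": datetime.now(timezone.utc).isoformat(),
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "python": sys.version.split()[0],
    }
    # Driver and benchmark versions are passed in by the caller
    # (hypothetical keys; fill them in from your own tooling).
    snapshot.update(extra or {})
    return snapshot


snap = environment_snapshot({"gpu_driver": "unknown", "benchmark_version": "unknown"})
print(json.dumps(snap, indent=2, sort_keys=True))
```

Storing the snapshot as JSON keeps it easy to diff after a driver or OS update.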

Machine learning benchmarking and experiment tracking platforms

Good experiment tracking captures both model scores and the environment that produced them. That clarity turns scattered runs into reproducible, actionable results for any project.

DagsHub unifies Git, DVC, and MLflow so teams track code, data, and experiments together. It includes a model registry, pipelines, and collaboration features that simplify reproducibility and custom metrics.

MLflow is a solid foundation for experiment logging. Track parameters, metrics such as F1 and precision/recall, and artifacts, then use the Model Registry to promote versions from staging to production.

Weights & Biases shines for real-time monitoring, dashboards, and hyperparameter sweeps. Use it when fast iteration and team visibility matter, but weigh cloud costs and logging overhead.

Hugging Face Transformers speeds NLP benchmarking with pre-trained models, fine-tuning scripts, and standard evaluations like GLUE and SQuAD. Combine these with versioned data for repeatable results.

- Recommendation: pick DagsHub for end-to-end projects that need Git + DVC + MLflow.

- Use MLflow for on-prem tracking and model promotion.

- Choose W&B for live dashboards and rapid sweeps, and Hugging Face for NLP evaluation.

- Always record GPU/CPU utilization and environment snapshots for fair performance checks.
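To illustrate what an experiment tracker stores for each run, here is a minimal stand-in that appends runs to a JSON Lines file. It is not the API of MLflow, DagsHub, or W&B. The function names (`log_run`, `load_runs`, `best_run`) and all values are made up for illustration.

```python
import json
import tempfile
from pathlib import Path


def log_run(log_path, params, metrics, environment):
    """Append one run as a JSON line so every score keeps its context."""
    record = {"params": params, "metrics": metrics, "environment": environment}
    with open(log_path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")
    return record


def load_runs(log_path):
    """Read all logged runs back as a list of dicts."""
    with open(log_path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


def best_run(runs, metric):
    """Return the run with the highest value for the given metric."""
    return max(runs, key=lambda run: run["metrics"][metric])


# Illustrative values only; none of these numbers come from a real experiment.
with tempfile.TemporaryDirectory() as tmp:
    log_path = Path(tmp) / "runs.jsonl"
    log_run(log_path, {"lr": 0.01}, {"f1": 0.81, "latency_ms": 12.0}, {"framework": "unknown"})
    log_run(log_path, {"lr": 0.001}, {"f1": 0.84, "latency_ms": 15.5}, {"framework": "unknown"})
    winner = best_run(load_runs(log_path), "f1")
    print(winner["params"], winner["metrics"])
```

A dedicated platform adds artifact storage, a UI, and a registry on top of this basic idea of keeping parameters, metrics, and environment together.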

Competitor benchmarking tools for social, SEO, and web intelligence

Competitive intelligence covers social chatter, organic search, and web traffic — and each channel needs a different set of metrics.

Brandwatch shines for large-scale social performance. Its Benchmark module tracks engagement, reach, impressions, and share of voice across 100,000+ brands and adds AI-driven insights and real-time alerts as part of a full social media suite.

Rival IQ, Phlanx, and Talkwalker focus on engagement and alerts. Rival IQ automates leaderboards and anomaly detection across major platforms. Phlanx audits influencer engagement on YouTube, X, and Twitch. Talkwalker blends listening, sentiment, and image recognition for cross-channel monitoring.

Ahrefs and Semrush provide SEO-focused data: keyword gaps, backlinks, traffic sources, and SERP analysis. Semrush also includes ad and social tracking when you need a unified platform for market monitoring.

- Sprout Social — side-by-side social metrics and publishing.

- BuiltWith — tech stack history and adoption signals.

- Crayon — centralized site updates, news, and competitive insights.

- Similarweb — traffic sources, geography, and market share trends.

Pick solutions that match your workflows, prioritize integrations and reporting, and set alerts so your team can act on timely insights.

Benchmark tools comparison: mapping use cases to the right platforms

Choose a platform that aligns with your task: gaming stress, ML production, or market visibility. Start by naming the exact outcome you need to measure. That focus narrows the field quickly.

Hardware/system tests vs. ML experiments vs. market analysis

Hardware work needs cross-platform runs and thermals. Use GPUScore or 3DMark for gaming and stress scenarios. Add Geekbench 6 for quick CPU/GPU reads and UserBenchmark or Unigine for fast sanity checks.

Machine learning demands reproducibility and model registries. Pick DagsHub when you need Git+DVC+MLflow. Choose MLflow for on‑prem tracking and W&B for live dashboards and sweeps. Use Hugging Face for standard NLP evaluations.

Market analysis prioritizes reach and sentiment. Brandwatch covers broad monitoring and SOV. Use Rival IQ for social leaderboards and Ahrefs or Semrush for keyword and traffic visibility.

Which metrics matter for gaming, production ML, and brand performance

- Gaming: FPS percentiles, power draw, and thermals — use cross‑platform runs for parity.

- Production ML: F1, latency, and resource use — log environment and dataset versions.

- Brand performance: engagement rate, share of voice, and sentiment — ensure channel coverage matches goals.

Balance depth and speed: quick suites give fast reads, while full platforms deliver richer insights and collaboration features. Pilot a short list per task, score each platform for metrics relevance, integration effort, and reporting quality, then standardize on the best fit.
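The pilot scoring described above can be kept honest with a simple weighted matrix. This sketch assumes each platform is rated from 1 to 5 on the three criteria, with a higher `integration_effort` rating meaning easier integration. The platform names, ratings, and weights are placeholders, not assessments of real products.

```python
def score_platforms(ratings, weights):
    """Rank platforms by the weighted average of their criterion ratings."""
    total_weight = sum(weights.values())
    scores = {
        name: sum(criteria[c] * w for c, w in weights.items()) / total_weight
        for name, criteria in ratings.items()
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights and ratings, chosen only to show the arithmetic.
weights = {"metrics_relevance": 0.5, "integration_effort": 0.3, "reporting_quality": 0.2}
ratings = {
    "Platform A": {"metrics_relevance": 4, "integration_effort": 3, "reporting_quality": 5},
    "Platform B": {"metrics_relevance": 5, "integration_effort": 2, "reporting_quality": 3},
}

for name, score in score_platforms(ratings, weights):
    print(f"{name}: {score:.2f}")
```

Agreeing on the weights before the pilot starts helps prevent the scoring from being bent toward a favorite.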

Critical metrics to track and why they differ by platform

Metrics tell different stories across hardware labs, training clusters, and marketing channels. Pick signals that match what you must improve: stability, accuracy, or audience impact.

Hardware: real stability and efficiency

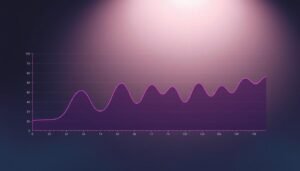

Track more than average FPS. Add 1% and 0.1% low FPS, power draw, thermals, clock behavior, and compute scores to show stability and performance per watt.
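As a rough illustration of why low-percentile FPS matters, the sketch below derives average FPS, 1% low, 0.1% low, and FPS per watt from a list of frame times. Definitions of "1% low" vary between tools. This version assumes it means the average FPS over the slowest 1% of frames. The frame times and power figure are invented.

```python
import math


def fps_summary(frame_times_ms, avg_power_watts=None):
    """Summarize frame times (milliseconds) into average and low-percentile FPS."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    n = len(frame_times_ms)
    slowest_first = sorted(frame_times_ms, reverse=True)

    def low_fps(fraction):
        # Average FPS over the slowest `fraction` of frames (at least one frame).
        k = max(1, math.ceil(n * fraction))
        return 1000.0 * k / sum(slowest_first[:k])

    summary = {
        "avg_fps": 1000.0 * n / sum(frame_times_ms),
        "low_1pct_fps": low_fps(0.01),
        "low_0_1pct_fps": low_fps(0.001),
    }
    if avg_power_watts:
        summary["fps_per_watt"] = summary["avg_fps"] / avg_power_watts
    return summary


# Invented data: 99 smooth frames at 10 ms and one 50 ms stutter.
frame_times = [10.0] * 99 + [50.0]
result = fps_summary(frame_times, avg_power_watts=200.0)
for key, value in result.items():
    print(f"{key}: {value:.2f}")
```

In this made-up run the average is about 96 FPS while the 1% low is 20 FPS, which is the kind of stutter an average alone hides.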

Machine learning: quality and cost

Log accuracy together with precision, recall, and F1, which stay informative when classes are imbalanced. Add latency, throughput, and GPU/CPU/memory usage so you balance effectiveness with resource efficiency.
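The quality metrics named above can be computed directly from labels and predictions. The following sketch handles the binary case in plain Python. The labels are synthetic and chosen to show how accuracy can look strong on imbalanced data while F1 does not.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Synthetic, imbalanced data: 5 positives and 95 negatives.
y_true = [1] * 5 + [0] * 95
always_negative = [0] * 100
print(binary_metrics(y_true, always_negative))

# A model that finds 3 of the 5 positives and raises 2 false alarms.
some_hits = [1, 1, 1, 0, 0] + [1, 1] + [0] * 93
print(binary_metrics(y_true, some_hits))
```

The model that always predicts the negative class reaches 0.95 accuracy with an F1 of 0.0, which is why accuracy alone is a weak headline number here.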

Marketing: reach, sentiment, and quality

Measure engagement rate, reach, impressions, share of voice, and sentiment. Those capture volume and audience quality across time zones and networks.
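Vendors define these marketing metrics differently, so it helps to write your own definitions down as code. This sketch assumes engagement rate is interactions divided by audience size, and share of voice is each brand's mentions divided by total mentions in the tracked set. The brand names and counts are invented.

```python
def engagement_rate(interactions, audience):
    """Interactions (likes, comments, shares) per audience member."""
    return interactions / audience if audience else 0.0


def share_of_voice(mentions_by_brand):
    """Each brand's fraction of all mentions in the tracked set."""
    total = sum(mentions_by_brand.values())
    return {
        brand: (count / total if total else 0.0)
        for brand, count in mentions_by_brand.items()
    }


# Invented numbers for illustration.
rate = engagement_rate(interactions=450, audience=15000)
sov = share_of_voice({"our_brand": 300, "rival_a": 500, "rival_b": 200})

print(f"engagement rate: {rate:.1%}")
for brand, share in sov.items():
    print(f"{brand}: {share:.0%}")
```

Whether the denominator is followers, reach, or impressions changes the result, so record the choice alongside the number.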

- Standardize definitions and time windows so trends stay comparable.

- Capture environment: drivers/OS for hardware; dataset and model versions for ML; network coverage and time zones for social checks.

- Use composite dashboards (FPS vs watts, F1 vs training time, SOV vs sentiment) and build alerts for anomalies.
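A basic anomaly alert can be sketched as a z-score check against a trailing window. It is shown here on an invented daily metric. The window length and threshold are assumptions to tune, and commercial platforms may use quite different detection methods.

```python
import statistics


def find_anomalies(values, window=7, threshold=3.0):
    """Flag points that deviate strongly from the trailing window before them."""
    alerts = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mean = statistics.fmean(baseline)
        stdev = statistics.stdev(baseline)
        if stdev == 0:
            continue  # a flat baseline gives no scale to compare against
        z = (values[i] - mean) / stdev
        if abs(z) >= threshold:
            alerts.append((i, values[i], z))
    return alerts


# Invented daily metric (for example mentions, or average FPS per nightly run).
daily = [100, 102, 98, 101, 99, 103, 100, 101, 160, 102]
for index, value, z in find_anomalies(daily):
    print(f"day {index}: value={value} z={z:.1f}")
```

Only the spike at index 8 is flagged in this series. Note that the spike then inflates the following windows, so a robust variant might use the median and MAD instead.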

Close the loop: link metrics to actions—tune fan curves, refine labels or hyperparameters, and adjust posting schedules to turn data into results.

Cross-platform benchmarking: ensuring apples-to-apples comparisons

Apples-to-apples checks start with a clear device taxonomy and locked test versions. Define categories (smartphone, laptop, desktop) and pick cross-platform runs like GPUScore when you need mobile-to-desktop parity.

Standardize the environment: pin OS, driver, firmware, and benchmark versions. Document resolution, presets, and power profiles so system-to-system comparisons stay fair.
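Before comparing two systems or two runs, it can help to diff their recorded settings and refuse the comparison if anything unexpected differs. The sketch below assumes each run has a flat dictionary of settings. The keys and values are hypothetical.

```python
def manifest_diff(baseline, candidate):
    """Return settings that differ, as {key: (baseline_value, candidate_value)}."""
    keys = sorted(set(baseline) | set(candidate))
    return {
        key: (baseline.get(key), candidate.get(key))
        for key in keys
        if baseline.get(key) != candidate.get(key)
    }


# Hypothetical manifests for two runs of the same test.
run_a = {"os": "Windows 11", "driver": "1.0", "resolution": "2560x1440", "power_profile": "balanced"}
run_b = {"os": "Windows 11", "driver": "1.1", "resolution": "2560x1440", "preset": "high"}

differences = manifest_diff(run_a, run_b)
if differences:
    print("Not an apples-to-apples comparison:")
    for key, (old, new) in differences.items():
        print(f"  {key}: {old!r} -> {new!r}")
```

A missing key shows up as `None` on one side, which catches settings that were recorded for one run but not the other.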

Device categories, versions, and standardized tests

Record cooling, TDP limits, and power mode—these change thermals and sustained performance. Run multiple passes, average results, and log outliers to build statistical confidence.
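The multi-pass advice above might be implemented as a small summary that reports the spread and flags suspicious passes instead of silently averaging them in. This sketch uses the common 1.5 × IQR rule, which is an assumption rather than a standard mandated by any benchmark suite. The scores are invented.

```python
import statistics


def summarize_passes(scores):
    """Summarize repeated benchmark passes and flag outliers with the 1.5*IQR rule."""
    if len(scores) < 2:
        raise ValueError("need at least two passes")
    q1, _, q3 = statistics.quantiles(scores, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "passes": len(scores),
        "mean": statistics.fmean(scores),
        "median": statistics.median(scores),
        "stdev": statistics.stdev(scores),
        "outliers": [s for s in scores if s < low or s > high],
    }


# Invented scores from seven passes; one pass looks thermally throttled.
scores = [8450, 8470, 8465, 8440, 8460, 7900, 8455]
summary = summarize_passes(scores)
print(summary)
```

Here the 7900 pass is flagged. Logging it together with temperatures and clocks is usually more useful than deleting it.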

Dataset versioning and environment parity for ML

Version code and data with Git/DVC, and log framework, CUDA/cuDNN, and runtime versions with MLflow or a registry. Use consistent train/validation/test splits and note data drift so model runs remain reproducible.

- Lock versions: software, drivers, and test suites.

- Record configs: hardware, presets, and sample sizes.

- Changelog: keep a running list of anomalies and changes.
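One common way to keep train/validation/test splits consistent across runs is to assign each example by hashing a stable ID, so the assignment never depends on row order or random seeds. The sketch below assumes every example has such an ID. The percentages and the ID format are illustrative.

```python
import hashlib
from collections import Counter


def assign_split(example_id, val_pct=10, test_pct=10):
    """Deterministically map an example ID to 'train', 'validation', or 'test'."""
    digest = hashlib.sha256(str(example_id).encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


# Hypothetical IDs; real projects would use a stable key from the dataset.
ids = [f"sample-{i}" for i in range(1000)]
counts = Counter(assign_split(i) for i in ids)
print(counts)
```

Because the split depends only on the ID, adding new examples later does not move existing ones between splits.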

Free vs. paid: what you get at each tier

Free editions help you start measuring real-world performance without a big budget. They let small teams validate basics and prove value before buying. That approach reduces risk and clarifies which advanced features matter most.

Free noncommercial GPU tests, limited feature access, and trial dashboards

Many suites offer a usable free tier. GPUScore offers free noncommercial runs, Geekbench 6 provides cross-platform snapshots, and Unigine and 3DMark have basic free editions.

Free plans let you capture core metrics, sanity-check hardware, and run quick experiments. Limits often appear as restricted stress tests, shorter historical data retention, or export caps.

When premium features (alerts, AI insights, registries) pay off

Paid subscriptions buy automation, APIs, and richer reporting. For ML, registries and W&B sweeps speed iteration. For marketing, Brandwatch or Rival IQ add real-time alerts and AI-driven insight.

- Reporting upgrades: stakeholder-ready dashboards, scheduled exports, and custom formats.

- Integration: API access and Slack/CI links automate workflows and reduce manual work.

- TCO: factor setup, governance, and maintenance—paid tiers can cut ongoing labor.

Start with a crawl-walk-run plan: test free options, set success criteria, then upgrade when paid features save measurable time or improve accuracy. Match tiers to team size and needs, review subscriptions quarterly, and avoid overlapping purchases so each suite fills a distinct role.

Common pitfalls and how to avoid misleading results

Many projects trip up when a single score becomes the headline instead of the full context.

Don’t chase a lone metric. Average FPS or a single accuracy number can mask throttling, class imbalance, or spikes. Track supporting metrics like power draw, thermals, utilization, and latency so you see the full performance story.

Outdated tests and selection bias

Old benchmarks can mislead. For example, dated Unigine releases miss modern GPU features and new workloads.

Pick current suites and log software, driver, and firmware details. Avoid cherry-picking games, datasets, or channels that favor one outcome.

Data drift, reproducibility, and configuration mismatches

Machine learning projects must watch input shifts and ground-truth changes. Monitor data and refresh baselines or retrain when performance drops.
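Input drift can be monitored in several ways. One widely used option is the Population Stability Index (PSI), sketched below for a single numeric feature. The bin count, the epsilon floor, and the rule-of-thumb thresholds (around 0.1 for a moderate shift and 0.25 for a large one) are conventions rather than guarantees. The data is synthetic.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clipped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor at eps so empty bins do not produce log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic feature values: a baseline and a shifted copy.
baseline = [i / 100 for i in range(1000)]
shifted = [v + 3 for v in baseline]

print(f"same data: {psi(baseline, baseline):.3f}")
print(f"shifted:   {psi(baseline, shifted):.3f}")
```

A rising PSI on key features is a prompt to inspect the data and re-evaluate the model. It does not show on its own that accuracy has dropped.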

Enforce reproducibility: version code and data, capture environment settings with DVC or MLflow-like logging, and standardize power modes, fan curves, and driver settings.

- Run multiple passes, flag outliers and check their cause before excluding them, and report confidence intervals.

- Contextualize marketing spikes with sentiment and content checks before acting on results.

- Document caveats clearly so stakeholders understand limits and avoid overgeneralizing.

- Update your benchmark set regularly to reflect new software and platform news.
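For the confidence intervals mentioned in the list above, a minimal sketch is a normal-approximation interval around the mean of repeated runs. With only a handful of passes a t-distribution would give a somewhat wider, more honest interval, so treat this as a lower bound on uncertainty. The sample values are invented.

```python
import math
import statistics


def mean_confidence_interval(samples, confidence=0.95):
    """Normal-approximation confidence interval for the mean of repeated runs."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean = statistics.fmean(samples)
    sem = statistics.stdev(samples) / math.sqrt(n)  # standard error of the mean
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * sem, mean + z * sem


# Invented average-FPS results from five passes.
runs = [60.1, 59.8, 60.4, 60.0, 59.7]
low, high = mean_confidence_interval(runs)
print(f"mean FPS 95% CI: {low:.2f} to {high:.2f}")
```

If the intervals of two configurations overlap heavily, the difference between them may be noise rather than a real gain.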

Building a repeatable benchmarking workflow for teams

Start small and standardize every step so your team can repeat results without guesswork.

Make a single source of truth: version code, data, and configs with Git + DVC and log experiments with MLflow or DagsHub. This combination captures runs, model registry entries, and pipeline history so each project stays reproducible and auditable.

Versioning code, data, and configs; automating pipelines

Automate recurring runs: schedule hardware suites like 3DMark or GPUScore, trigger ML pipelines, and pull marketing data on a cadence. Standardize presets and parameters to cut manual error and speed up monitoring.

Collaboration, reporting cadence, and stakeholder-ready visuals

- Shared dashboards: use W&B or the MLflow UI for ML teams and Brandwatch or Rival IQ reports for marketers.

- Reporting cadence: weekly trend snapshots, monthly deep dives, and real-time alerts for anomalies.

- Team process: assign owners, review PRs for benchmark changes, and annotate dashboards with decisions.

Track environment metadata (OS, drivers, frameworks) and template tests so new projects adopt consistent metrics. Close the loop by feeding outcomes into backlogs—tuning hardware, refining data, or updating software and campaign plans.

What’s next: trends shaping performance benchmarking

The future ties live monitoring to AI-driven explanations so teams act faster. Real-time signals will flag anomalies and suggest root causes across hardware, ML, and marketing.

AI-assisted insights, real-time monitoring, and cross-environment tests

AI will auto-explain spikes — Brandwatch already surfaces competitor trends and W&B offers live experiment sweeps. Expect platforms that highlight causation, not just correlation.

- Real-time monitoring becomes standard across ML, marketing, and hardware labs.

- Cross-environment tests will expand: mobile-to-desktop graphics runs (GPUScore) and multi-framework model parity.

- Richer stress and feature tests (3DMark) will model ray tracing and modern upscaling pipelines.

- Privacy and governance will push teams to balance cloud convenience with self-hosted solutions for compliance.

Automation and APIs will link runners, experiment logs, and market feeds into end-to-end solutions. Visuals will shift to decision-ready views that show trade-offs, uncertainty, and recommended next steps.

Your next step to a smarter performance baseline

Begin with a clear question: which performance signal matters to your users, and which device or domain must you measure? Define that outcome, then pick tools to match: GPUScore, 3DMark, or Geekbench for hardware; DagsHub, MLflow, or W&B for ML; and Brandwatch, Ahrefs, or Similarweb for market analysis.

Shortlist one or two solutions per domain and run a week-long pilot with fixed configs. Document versions, datasets, and settings from day one so results stay reproducible.

Use free tiers to validate fit, then upgrade when a paid plan saves time or adds registries or alerts. Build a shared dashboard stakeholders can scan, schedule monthly baseline refreshes, and run a quarterly review.

Your next step: pick a domain, run one controlled test this week, and share a one-page summary that ties metrics to clear next actions.